MariaDB Active-Passive Replication: Complete Guide to Setup, Failover, and Monitoring

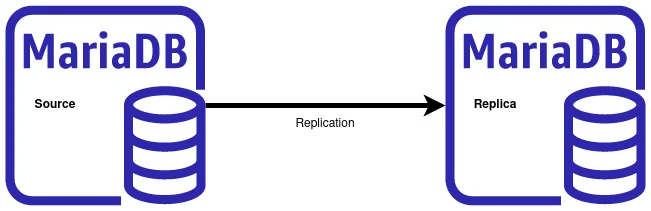

Introduction to MariaDB Active-Passive Replication

Database availability is critical for modern applications. When your MariaDB database goes down, your entire application typically goes with it. Active-passive replication (also called primary-replica or master-slave replication) provides a proven solution for high availability, disaster recovery, and read scalability.

In an active-passive setup, one server (the primary) handles all write operations while one or more replica servers maintain synchronized copies of the data. If the primary fails, you can promote a replica to become the new primary, minimizing downtime. This architecture is essential for disaster recovery planning and maintaining business continuity.

This comprehensive guide walks you through setting up MariaDB replication, maintaining it in production, performing emergency failovers, and implementing proactive monitoring to catch problems before they cause outages.

Understanding MariaDB Replication Architecture

Before diving into configuration, it's important to understand how MariaDB replication works. The primary server writes all database changes to binary logs (binlogs). Replica servers connect to the primary, read these binary logs, and replay the same changes on their local databases.

There are two primary replication formats:

- Statement-based replication (SBR): Replicas execute the same SQL statements that ran on the primary. This is compact but can cause inconsistencies with non-deterministic functions.

- Row-based replication (RBR): Replicas receive the actual row changes. This is more reliable and consistent, especially for complex queries, but generates larger binlog files.

- Mixed replication: MariaDB intelligently switches between statement and row-based replication based on the query type.

For production environments, row-based or mixed replication is recommended for maximum consistency and reliability.

Prerequisites and Planning

Before setting up replication, ensure you have:

- Two or more MariaDB servers (10.5+ recommended for best features)

- Network connectivity between servers on port 3306 (or your custom port)

- Sufficient disk space on replicas to store the full database plus binlogs

- A backup strategy already in place

- Root or administrative access to all servers

- Matching MariaDB versions (or replicas on same/newer version than primary)

Plan your server roles carefully. In this guide, we'll use:

- db-primary: 192.168.1.10 (active primary server)

- db-replica1: 192.168.1.11 (passive replica)

Step 1: Configure the Primary Server

First, configure the primary server to enable binary logging and set a unique server ID.

Edit the MariaDB Configuration

On the primary server, edit /etc/mysql/mariadb.conf.d/50-server.cnf (Debian/Ubuntu) or /etc/my.cnf.d/server.cnf (RHEL/CentOS):

[mariadb]

# Unique server ID (must be different on each server)

server-id = 1

# Enable binary logging

log-bin = /var/log/mysql/mariadb-bin

log-bin-index = /var/log/mysql/mariadb-bin.index

# Replication format (ROW recommended for consistency)

binlog-format = ROW

# Optional: Limit binlog retention to prevent disk filling

expire_logs_days = 7

# Optional: Database to replicate (omit to replicate all databases)

# binlog-do-db = your_database_name

# Optional: Databases to exclude from replication

# binlog-ignore-db = mysql

# binlog-ignore-db = information_schema

# Network binding - ensure replicas can connect

bind-address = 0.0.0.0

# Optional but recommended: Enable GTID for easier failover

gtid_strict_mode = 1

log_slave_updates = 1

Ensure the log directory exists and has proper permissions:

sudo mkdir -p /var/log/mysql

sudo chown mysql:mysql /var/log/mysql

Restart MariaDB

sudo systemctl restart mariadb

sudo systemctl status mariadb

Create a Replication User

Connect to MariaDB and create a dedicated user for replication:

sudo mysql -u root -p

CREATE USER 'replication_user'@'192.168.1.%' IDENTIFIED BY 'your_secure_password_here';

GRANT REPLICATION SLAVE ON *.* TO 'replication_user'@'192.168.1.%';

FLUSH PRIVILEGES;

The 192.168.1.% wildcard allows any server on that subnet to connect. Adjust this to match your network configuration.

Note the Binary Log Position

Record the current binary log file and position (needed for initial replica setup):

SHOW MASTER STATUS;

You'll see output like:

+--------------------+----------+--------------+------------------+

| File | Position | Binlog_Do_DB | Binlog_Ignore_DB |

+--------------------+----------+--------------+------------------+

| mariadb-bin.000001 | 328 | | |

+--------------------+----------+--------------+------------------+

Save these values - you'll need them when configuring the replica.

Step 2: Create a Consistent Database Snapshot

Before starting replication, you need to copy the current database state to the replica. There are several approaches:

Method 1: Using mysqldump (Smaller Databases)

For databases under 100GB, mysqldump is straightforward:

# Lock tables and record position

sudo mysql -u root -p -e "FLUSH TABLES WITH READ LOCK; SHOW MASTER STATUS;" > /tmp/master-status.txt

# In a separate terminal, create the backup

sudo mysqldump -u root -p --all-databases --master-data=2 --single-transaction --routines --triggers > /tmp/full-backup.sql

# Unlock tables

sudo mysql -u root -p -e "UNLOCK TABLES;"

# Transfer to replica

scp /tmp/full-backup.sql user@192.168.1.11:/tmp/

Method 2: Using Mariabackup (Larger Databases)

For larger databases or minimal downtime requirements, use Mariabackup (MariaDB's hot backup tool):

# Install mariabackup if not present

sudo apt-get install mariadb-backup # Debian/Ubuntu

sudo yum install MariaDB-backup # RHEL/CentOS

# Create backup (runs while database is online)

sudo mariabackup --backup --target-dir=/backup/mariadb --user=root --password=your_password

# Prepare the backup

sudo mariabackup --prepare --target-dir=/backup/mariadb

# Transfer to replica

sudo rsync -avz /backup/mariadb/ user@192.168.1.11:/var/lib/mysql/

Step 3: Configure the Replica Server

Edit Replica Configuration

On the replica server, edit the MariaDB configuration file:

[mariadb]

# Unique server ID (different from primary!)

server-id = 2

# Read-only mode (prevents accidental writes to replica)

read_only = 1

# Enable binary logging on replica (required for GTID and chained replication)

log-bin = /var/log/mysql/mariadb-bin

log-bin-index = /var/log/mysql/mariadb-bin.index

binlog-format = ROW

# Relay log settings

relay-log = /var/log/mysql/relay-bin

relay-log-index = /var/log/mysql/relay-bin.index

# GTID settings (must match primary)

gtid_strict_mode = 1

log_slave_updates = 1

# Network binding

bind-address = 0.0.0.0

# Optional: Skip errors (use cautiously, only for specific error codes)

# slave-skip-errors = 1062,1053

Restore the Database Backup

If using mysqldump:

sudo mysql -u root -p < /tmp/full-backup.sql

If using Mariabackup, the data is already in place - just set proper permissions:

sudo chown -R mysql:mysql /var/lib/mysql

Restart MariaDB on Replica

sudo systemctl restart mariadb

sudo systemctl status mariadb

Step 4: Start Replication

Connect to the replica server and configure it to replicate from the primary:

sudo mysql -u root -p

CHANGE MASTER TO

MASTER_HOST='192.168.1.10',

MASTER_USER='replication_user',

MASTER_PASSWORD='your_secure_password_here',

MASTER_PORT=3306,

MASTER_LOG_FILE='mariadb-bin.000001',

MASTER_LOG_POS=328,

MASTER_CONNECT_RETRY=10;

START SLAVE;

Replace MASTER_LOG_FILE and MASTER_LOG_POS with the values from your SHOW MASTER STATUS output.

Verify Replication Status

SHOW SLAVE STATUSG

Look for these critical fields:

Slave_IO_Running: Yes

Slave_SQL_Running: Yes

Seconds_Behind_Master: 0

Last_Error: (should be empty)

If both Slave_IO_Running and Slave_SQL_Running show "Yes", replication is working correctly.

Maintaining Replication in Production

Monitor Replication Lag

Replication lag occurs when the replica falls behind the primary. Monitor Seconds_Behind_Master regularly:

SHOW SLAVE STATUSG | grep Seconds_Behind_Master

Values over 60 seconds indicate potential problems. Common causes include:

- High write load on the primary

- Insufficient replica resources (CPU, disk I/O)

- Network latency between servers

- Large transactions that take time to replay

Manage Binary Log Growth

Binary logs can consume significant disk space. Monitor and purge old logs:

# View current binary logs

SHOW BINARY LOGS;

# Purge logs older than 7 days

PURGE BINARY LOGS BEFORE NOW() - INTERVAL 7 DAY;

# Or purge logs before a specific log file

PURGE BINARY LOGS TO 'mariadb-bin.000100';

Never purge logs that replicas haven't yet processed. Always check replica status first.

Handle Replication Errors

When replication breaks, SHOW SLAVE STATUSG will display the error. Common issues:

Duplicate Key Errors (Error 1062):

# Skip one event

STOP SLAVE;

SET GLOBAL SQL_SLAVE_SKIP_COUNTER = 1;

START SLAVE;

Missing Row Errors (Error 1032): Usually indicates data inconsistency. You may need to re-initialize the replica from a fresh backup.

Regularly Test Failover Procedures

Don't wait for an emergency to test failover. Schedule quarterly failover drills to ensure your team knows the process and your configuration works correctly.

Emergency Failover: Promoting a Replica to Primary

When the primary server fails, you need to promote a replica to become the new primary. Here's the step-by-step process:

Step 1: Verify the Replica is Current

Connect to the replica and check replication status:

SHOW SLAVE STATUSG

Ideally, Seconds_Behind_Master should be 0 or very low. If the primary is completely down, this will show NULL, which is expected.

Step 2: Stop Replication on the Replica

STOP SLAVE;

Step 3: Make the Replica Writable

SET GLOBAL read_only = 0;

Or permanently by editing the configuration file and commenting out read_only = 1, then restarting MariaDB.

Step 4: Point Your Application to the New Primary

Update your application configuration to point to the new primary server (192.168.1.11 in our example). This typically involves:

- Updating database connection strings

- Changing DNS records (if using DNS-based failover)

- Updating load balancer configuration

- Restarting application servers to pick up new configuration

For a WordPress site, you'd update wp-config.php:

define('DB_HOST', '192.168.1.11');

Step 5: Reset Replication State

Clean up replication configuration on the new primary:

RESET SLAVE ALL;

Step 6: Configure Other Replicas (if any)

If you have multiple replicas, point them to the new primary:

STOP SLAVE;

CHANGE MASTER TO

MASTER_HOST='192.168.1.11',

MASTER_USER='replication_user',

MASTER_PASSWORD='your_secure_password_here',

MASTER_PORT=3306,

MASTER_LOG_FILE='mariadb-bin.000001',

MASTER_LOG_POS=328;

START SLAVE;

Step 7: Restore the Original Primary (when recovered)

When the original primary comes back online, you have two options:

Option 1: Make it a replica of the new primary (recommended for stability)

Option 2: Fail back to the original primary (requires careful synchronization)

For Option 1, configure the recovered server as a replica following the steps in "Configure the Replica Server" above.

Proactive Monitoring with Open-Source Tools

Don't wait for replication to fail. Implement proactive monitoring to catch issues early.

1. Percona Monitoring and Management (PMM)

PMM is a comprehensive, free monitoring solution specifically designed for MySQL and MariaDB:

# Install PMM Server (Docker recommended)

docker run -d -p 443:443 --name pmm-server percona/pmm-server:2

# Install PMM Client on each database server

wget https://downloads.percona.com/downloads/pmm2/2.41.0/binary/debian/bullseye/x86_64/pmm2-client_2.41.0-6.bullseye_amd64.deb

sudo dpkg -i pmm2-client_2.41.0-6.bullseye_amd64.deb

# Connect to PMM Server

sudo pmm-admin config --server-insecure-tls --server-url=https://admin:admin@your-pmm-server:443

# Add MySQL monitoring

sudo pmm-admin add mysql --username=pmm_user --password=pmm_password

PMM provides:

- Real-time replication lag monitoring

- Binary log growth tracking

- Query performance analysis

- Visual dashboards for replication topology

- Alerting when replication breaks or lags

2. Custom Monitoring with Prometheus and Grafana

For more control, use Prometheus to scrape metrics and Grafana for visualization:

# Install mysqld_exporter on each database server

wget https://github.com/prometheus/mysqld_exporter/releases/download/v0.15.0/mysqld_exporter-0.15.0.linux-amd64.tar.gz

tar xvf mysqld_exporter-0.15.0.linux-amd64.tar.gz

sudo mv mysqld_exporter-0.15.0.linux-amd64/mysqld_exporter /usr/local/bin/

# Create exporter user in MariaDB

CREATE USER 'exporter'@'localhost' IDENTIFIED BY 'exporter_password';

GRANT PROCESS, REPLICATION CLIENT, SELECT ON *.* TO 'exporter'@'localhost';

# Create systemd service

sudo nano /etc/systemd/system/mysqld_exporter.service

Service file content:

[Unit]

Description=MySQL Exporter

After=network.target

[Service]

Type=simple

User=nobody

Environment="DATA_SOURCE_NAME=exporter:exporter_password@(localhost:3306)/"

ExecStart=/usr/local/bin/mysqld_exporter

Restart=always

[Install]

WantedBy=multi-user.target

Start the exporter:

sudo systemctl daemon-reload

sudo systemctl start mysqld_exporter

sudo systemctl enable mysqld_exporter

Configure Prometheus to scrape the metrics and import a MariaDB dashboard in Grafana.

3. Simple Script-Based Monitoring

For basic monitoring, create a simple bash script:

#!/bin/bash

# Save as /usr/local/bin/check_replication.sh

MYSQL_USER="root"

MYSQL_PASS="your_password"

ALERT_EMAIL="admin@yourdomain.com"

# Check replication status

STATUS=$(mysql -u$MYSQL_USER -p$MYSQL_PASS -e "SHOW SLAVE STATUSG" 2>/dev/null)

IO_RUNNING=$(echo "$STATUS" | grep "Slave_IO_Running:" | awk '{print $2}')

SQL_RUNNING=$(echo "$STATUS" | grep "Slave_SQL_Running:" | awk '{print $2}')

SECONDS_BEHIND=$(echo "$STATUS" | grep "Seconds_Behind_Master:" | awk '{print $2}')

LAST_ERROR=$(echo "$STATUS" | grep "Last_Error:" | cut -d: -f2-)

# Alert if replication is broken

if [[ "$IO_RUNNING" != "Yes" ]] || [[ "$SQL_RUNNING" != "Yes" ]]; then

echo "CRITICAL: Replication is broken on $(hostname)" | mail -s "MariaDB Replication Alert" $ALERT_EMAIL

echo "IO Running: $IO_RUNNING, SQL Running: $SQL_RUNNING"

echo "Error: $LAST_ERROR"

exit 2

fi

# Alert if lag is high

if [[ "$SECONDS_BEHIND" != "NULL" ]] && [[ $SECONDS_BEHIND -gt 60 ]]; then

echo "WARNING: Replication lag is $SECONDS_BEHIND seconds on $(hostname)" | mail -s "MariaDB Replication Lag Warning" $ALERT_EMAIL

exit 1

fi

echo "Replication status OK: $SECONDS_BEHIND seconds behind master"

exit 0

Make it executable and add to cron:

sudo chmod +x /usr/local/bin/check_replication.sh

sudo crontab -e

# Add this line to check every 5 minutes

*/5 * * * * /usr/local/bin/check_replication.sh

4. Using Orchestrator for Automated Failover

Orchestrator is an advanced replication topology manager that can automatically detect failures and promote replicas:

# Install Orchestrator

wget https://github.com/openark/orchestrator/releases/download/v3.2.6/orchestrator_3.2.6_amd64.deb

sudo dpkg -i orchestrator_3.2.6_amd64.deb

# Configure Orchestrator (edit /etc/orchestrator.conf.json)

# Set your database credentials and discovery settings

# Start Orchestrator

sudo systemctl start orchestrator

sudo systemctl enable orchestrator

Orchestrator provides:

- Visual topology maps

- Automatic failure detection

- Automated failover with configurable policies

- Manual failover tools

- Replication lag visualization

Advanced Topics and Best Practices

Using GTID-Based Replication

Global Transaction IDs (GTIDs) make failover easier by uniquely identifying each transaction. When properly configured, you don't need to track binary log positions:

# On replica with GTID enabled

CHANGE MASTER TO

MASTER_HOST='192.168.1.10',

MASTER_USER='replication_user',

MASTER_PASSWORD='your_secure_password_here',

MASTER_USE_GTID=slave_pos;

START SLAVE;

Semi-Synchronous Replication

For critical data, enable semi-synchronous replication to ensure at least one replica acknowledges writes before the primary commits:

# On primary

INSTALL PLUGIN rpl_semi_sync_master SONAME 'semisync_master.so';

SET GLOBAL rpl_semi_sync_master_enabled = 1;

SET GLOBAL rpl_semi_sync_master_timeout = 1000;

# On replica

INSTALL PLUGIN rpl_semi_sync_slave SONAME 'semisync_slave.so';

SET GLOBAL rpl_semi_sync_slave_enabled = 1;

STOP SLAVE IO_THREAD;

START SLAVE IO_THREAD;

Multi-Source Replication

MariaDB supports replicating from multiple primaries simultaneously, useful for consolidating data from multiple databases.

Delayed Replication

Configure a replica with intentional lag to protect against accidental data deletion:

CHANGE MASTER TO MASTER_DELAY = 3600; # 1 hour delay

If someone drops a table accidentally, you have an hour to catch it before the replica executes the same command.

Troubleshooting Common Issues

Issue: Replication won't start

Check error logs: sudo tail -f /var/log/mysql/error.log

Verify network connectivity: telnet 192.168.1.10 3306

Confirm replication user credentials and permissions

Issue: Replication lag increasing

Check replica server resources (CPU, disk I/O, memory)

Review slow queries on primary: SHOW FULL PROCESSLIST;

Consider upgrading replica hardware or using parallel replication

Issue: Binary logs filling disk

Implement automatic purging with expire_logs_days

Monitor disk space and set up alerts

Consider moving binlogs to separate disk partition

Conclusion

MariaDB active-passive replication is a robust solution for high availability and disaster recovery. By following this guide, you've learned how to:

- Configure primary and replica servers with proper binary logging

- Create consistent database snapshots for replication initialization

- Start and verify replication status

- Maintain replication health in production environments

- Perform emergency failovers when the primary fails

- Implement comprehensive monitoring with open-source tools

Remember that replication is not a replacement for proper backups. Always maintain regular backup schedules independent of your replication setup. Replication protects against hardware failure and provides read scalability, but backups protect against data corruption, accidental deletions, and security incidents.

For businesses that need fully managed database replication, monitoring, and failover as part of their infrastructure, explore our disaster recovery solutions and managed hosting services. Our team handles the complexity of high-availability database architecture so you can focus on your application.

If you have questions about implementing MariaDB replication for your specific use case, contact our team for expert guidance.